Labels are ordered by beauty, from beautiful to ugly. The larger a label, the more frequently it occurs.

We can compare the changes in Urban Elements (left) and Urban Design Metrics (right) for all beautified locations.

Blue bars indicate an increase in the beautified location, red bars indicate a decrease.

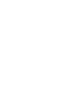

Images of urban places are rated by humans in pairs to determine which one is more beautiful. We then transform these ratings into absolute ranks using TrueSkill.
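The step from pairwise votes to a ranking can be sketched as follows. This is a simplified two-player, no-draw version of the TrueSkill update, written with the standard library only; it is not the project's actual code. The constants are the conventional TrueSkill defaults and the function names `update` and `rank_images` are assumptions made for illustration.

```python
import math
from statistics import NormalDist

# Conventional TrueSkill defaults (assumed; not taken from the FaceLift setup).
MU0 = 25.0
SIGMA0 = MU0 / 3
BETA = MU0 / 6

_STD_NORMAL = NormalDist()


def update(winner, loser):
    """Return updated (mu, sigma) pairs after `winner` is judged more beautiful.

    Simplified two-player update without draws or skill drift.
    """
    mu_w, sigma_w = winner
    mu_l, sigma_l = loser
    c = math.sqrt(2 * BETA**2 + sigma_w**2 + sigma_l**2)
    t = (mu_w - mu_l) / c
    v = _STD_NORMAL.pdf(t) / _STD_NORMAL.cdf(t)  # mean correction term
    w = v * (v + t)                              # variance correction term
    new_w = (mu_w + sigma_w**2 / c * v,
             sigma_w * math.sqrt(1 - sigma_w**2 / c**2 * w))
    new_l = (mu_l - sigma_l**2 / c * v,
             sigma_l * math.sqrt(1 - sigma_l**2 / c**2 * w))
    return new_w, new_l


def rank_images(comparisons):
    """Turn (winner_id, loser_id) votes into image ids, most beautiful first."""
    ratings = {}
    for winner_id, loser_id in comparisons:
        w = ratings.get(winner_id, (MU0, SIGMA0))
        l = ratings.get(loser_id, (MU0, SIGMA0))
        ratings[winner_id], ratings[loser_id] = update(w, l)
    # Conservative score: mean minus three standard deviations.
    return sorted(ratings,
                  key=lambda i: ratings[i][0] - 3 * ratings[i][1],
                  reverse=True)


# Hypothetical votes: each tuple is (image judged more beautiful, the other image).
votes = [("park", "lot"), ("park", "road"), ("road", "lot")]
ranking = rank_images(votes)
```

Sorting by the mean minus three standard deviations is a common conservative choice: an image only ranks highly once enough votes have reduced the uncertainty about it.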

The most beautiful and the ugliest images are then used as Training Data.

A neural network is then trained on visual cues of beauty and ugliness.

An original (ugly) image is then used as Input for the Beautification Process. The network generates a template using generative models, maximizing the visual cues for beauty in accordance with the network’s knowledge. Since this template does not (yet) look like an actual place, we search our database for similar images to find the closest match.
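The closest-match search can be illustrated with a small nearest-neighbor lookup. This is a sketch only: it assumes every image, including the generated template, has already been reduced to a numeric feature vector, and it uses cosine similarity as the comparison measure. Both the feature vectors and the similarity measure are assumptions, not details given here.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def closest_match(template_features, database):
    """Return the id of the database image most similar to the template."""
    return max(
        database,
        key=lambda image_id: cosine_similarity(template_features, database[image_id]),
    )


# Hypothetical two-dimensional features; real feature vectors would be much longer.
database = {"street_a": [1.0, 0.0], "street_b": [0.6, 0.8]}
match = closest_match([0.5, 0.9], database)
```

A linear scan like this is enough for a small collection; a large image database would normally use an approximate nearest-neighbor index instead.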

Both the original and the beautiful images are then analyzed: PlacesNet detects possible Scene types in the image, and SegNet shows which urban elements are visible in the image. Using these insights, we can calculate Urban Design Metrics.
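As a rough illustration of how segmentation output could feed such a comparison, the sketch below computes the share of pixels per urban element in each image and the change between the two. It assumes the segmentation is available as a 2D grid of class labels. The label names and the helper functions are hypothetical, and the actual Urban Design Metrics may be defined differently.

```python
from collections import Counter


def element_shares(label_map):
    """Fraction of pixels belonging to each class in a 2D grid of labels."""
    counts = Counter(label for row in label_map for label in row)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


def element_changes(original, beautified):
    """Per-class change in pixel share (positive means more in the beautified image)."""
    before = element_shares(original)
    after = element_shares(beautified)
    labels = sorted(set(before) | set(after))
    return {label: after.get(label, 0.0) - before.get(label, 0.0) for label in labels}


# Tiny hypothetical label maps (2 x 2 pixels each).
original = [["road", "road"], ["building", "sky"]]
beautified = [["tree", "road"], ["tree", "sky"]]
changes = element_changes(original, beautified)
```

Positive values correspond to elements that increase in the beautified location and negative values to elements that decrease.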

As a result, we can display the visualizations above.

AI algorithms have been used to predict whether people are likely to find a particular urban scene pleasant or not by learning from known human preferences. Indeed, there are specific urban elements that are universally considered beautiful: from greenery, to small streets, to memorable spaces.

Scientists at Nokia Bell Labs Cambridge have now gone beyond that by building algorithms that automatically recreate beauty rather than simply assessing it. They built a deep learning framework (which they named FaceLift) that is able to both beautify existing urban scenes (existing Google Street View images) and explain which urban elements make those transformed scenes beautiful.

The team assembled 20,000 Google Street View images that volunteers had labelled as beautiful or ugly. They then fed all these images into a computer running a deep learning framework, a kind of algorithm that mimics the human brain by processing data in neural networks. In so doing, the algorithm learned what humans thought was ugly or beautiful. Based on that, it was asked to improve an ugly scene, which it did using a generative adversarial network, a relatively recent class of algorithms that is currently used to recreate “fake” yet realistic human faces. The resulting images were then matched to the most closely corresponding images of real spaces. Finally, the algorithm explained how the addition and removal of specific urban elements had made the scene more beautiful.

FaceLift is able to generate beautified scenes extremely fast (in seconds) and at scale (for an entire city). User studies showed that, with FaceLift, urban planners ended up considering alternative approaches to urban interventions that might not otherwise have been apparent, and city residents were able to visually assess the impact of micro-interventions in their neighborhoods.

Find an interactive map here, while publications and datasets are available below.

Sagar Joglekar, Daniele Quercia, Miriam Redi, Luca Maria Aiello, Tobias Kauer, Nishanth Sastry

FaceLift: a transparent deep learning framework to beautify urban scenes

Royal Society Open Science, 2020

Tobias Kauer, Sagar Joglekar, Miriam Redi, Luca Maria Aiello, Daniele Quercia

Mapping and Visualizing Deep-Learning Urban Beautification

IEEE Computer Graphics and Applications, 2018

Download models, data, and analysis code: from here